Alexander Ellis-Wilson

Web Developer

Born in England in 2002, I believe computing should be accessible and safe for everyone. I graduated from Bournemouth University in 2024 with a BSc in Cyber Forensics and Security.

About me

I am drawn to the intersection of design and technology: wondering how things are made, how they work and how they could be improved.

This curiosity drove me to pursue a career in technology and to learn as much as possible about its many different aspects.

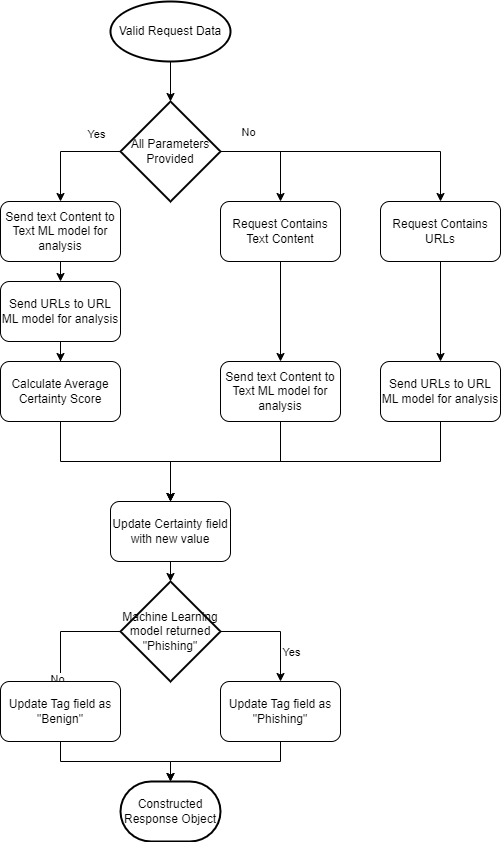

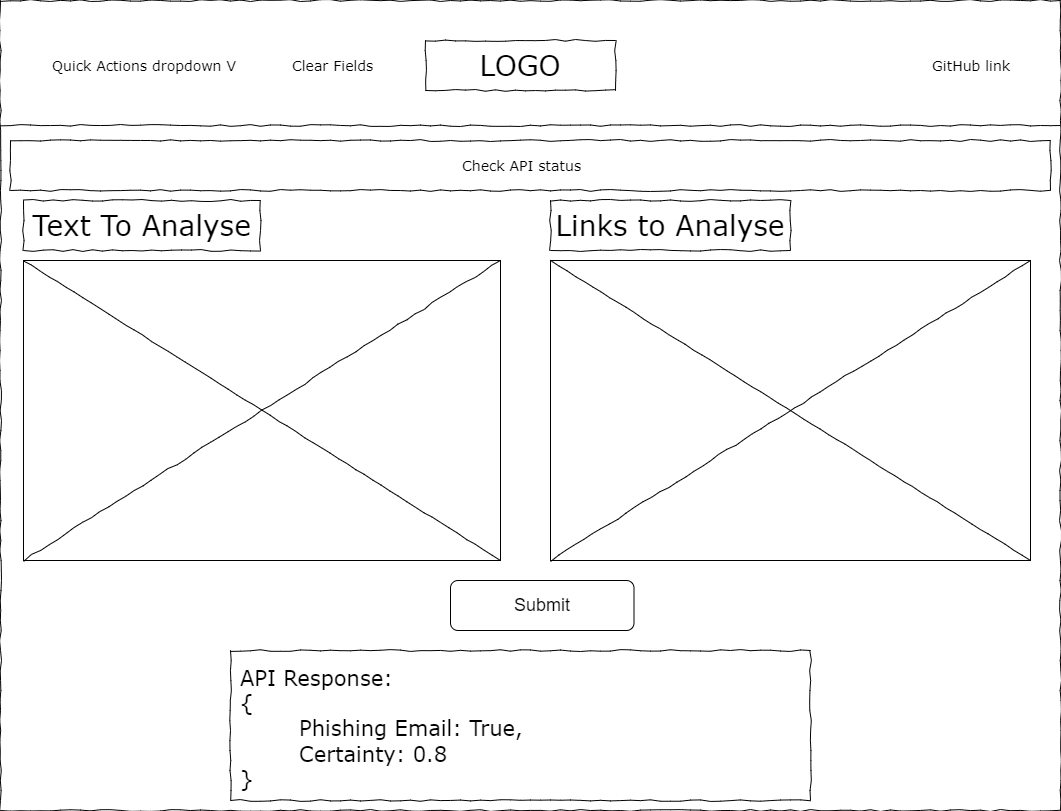

Recently, I graduated from Bournemouth University with a First Class Honours BSc in Cyber Forensics and Security. There I learnt about the malicious side of computing, developed a love for machine learning and gained a deeper knowledge of computer fundamentals and SIEM (Security Information and Event Management).

Playing RPGs such as World of Warcraft on my old Pentium laptop started my fascination with programming and how it could be utilised to create complex and nuanced things.

Eventually, I was introduced to Python, where the spark was truly lit: with only a simple set of rules, I created the very basics of a text-based adventure game. This continued in college, where I began experimenting with 3D graphics in p5.js and basic app development in Java with Android Studio, making the most of the free time during COVID.

Experience

"A jack of all trades is a master of none, but oftentimes better than a master of one." - proverb, often misattributed to William Shakespeare

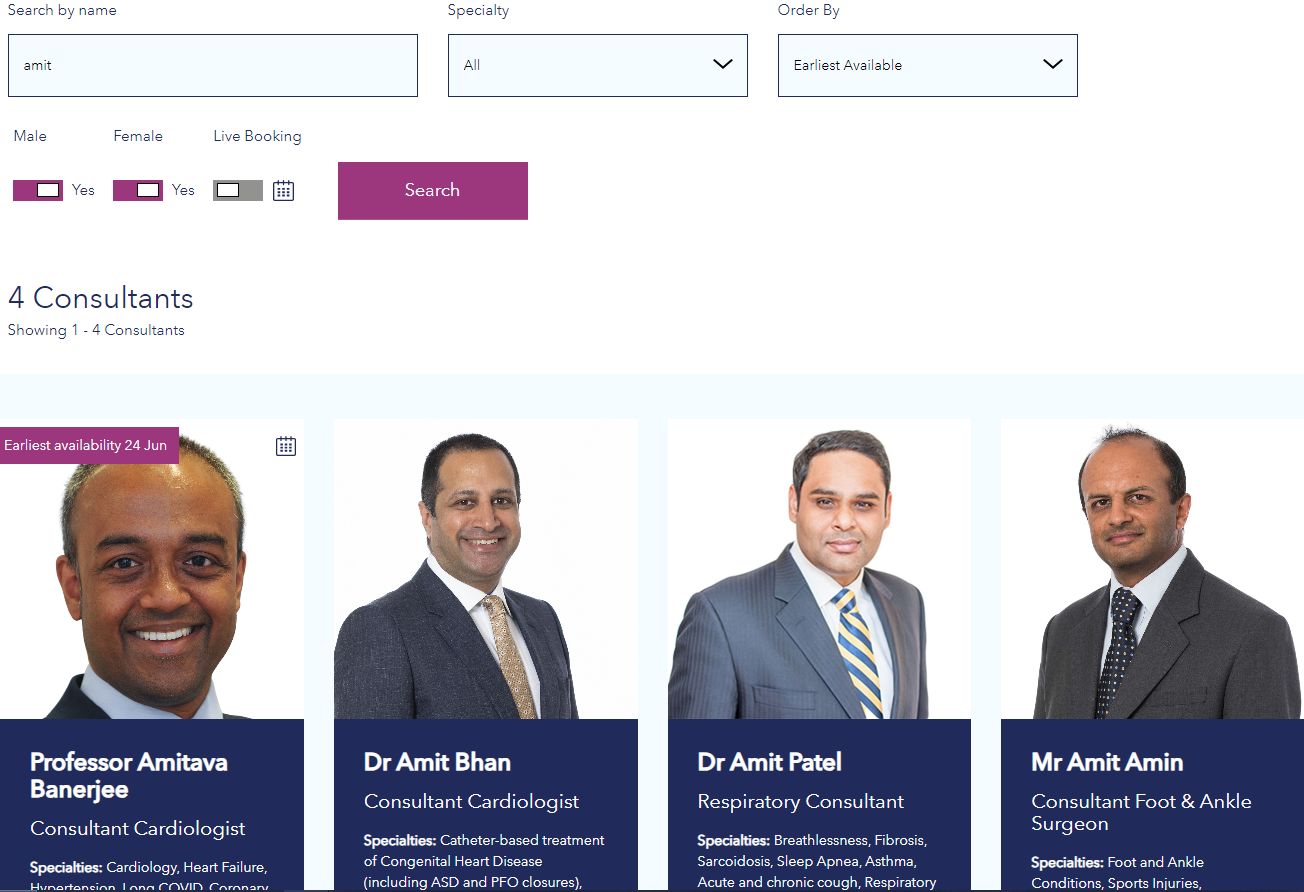

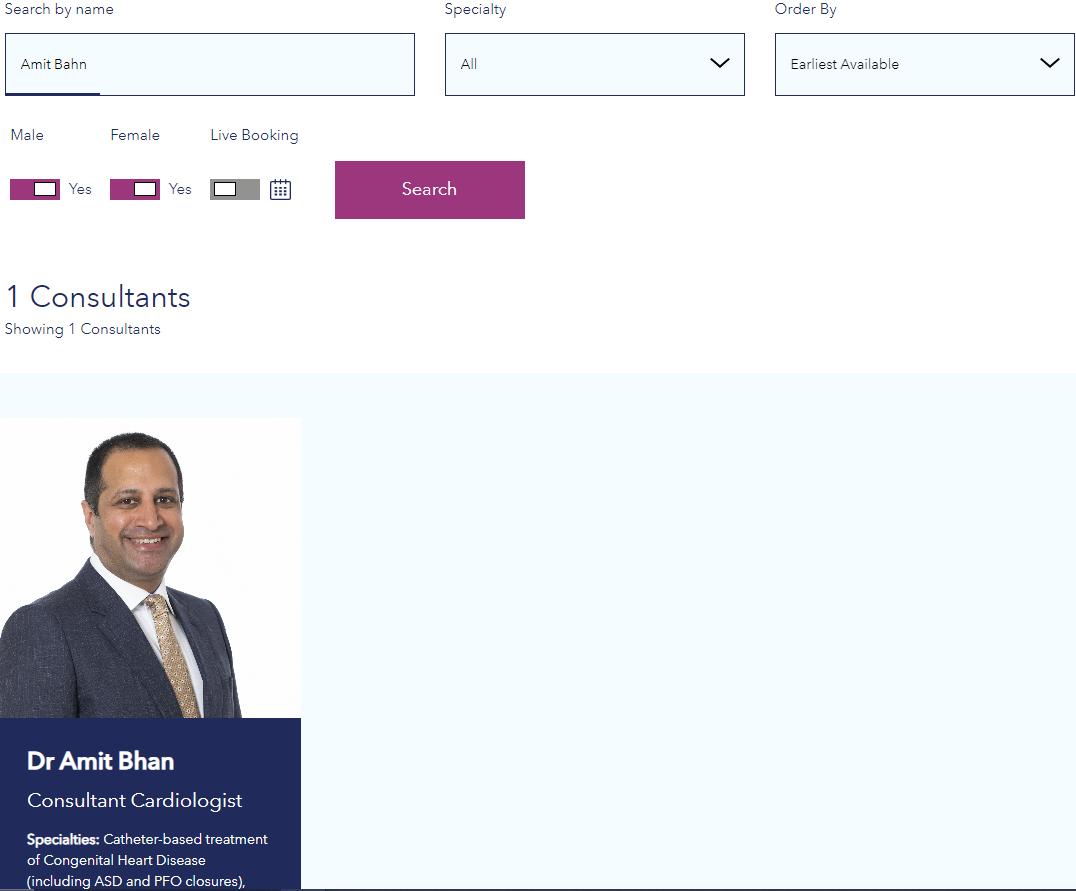

- HTML

- CSS

- SCSS

- JavaScript

- jQuery

- Angular

- Node.js

- NUnit

- .NET Core

- ASP.NET

- MVC

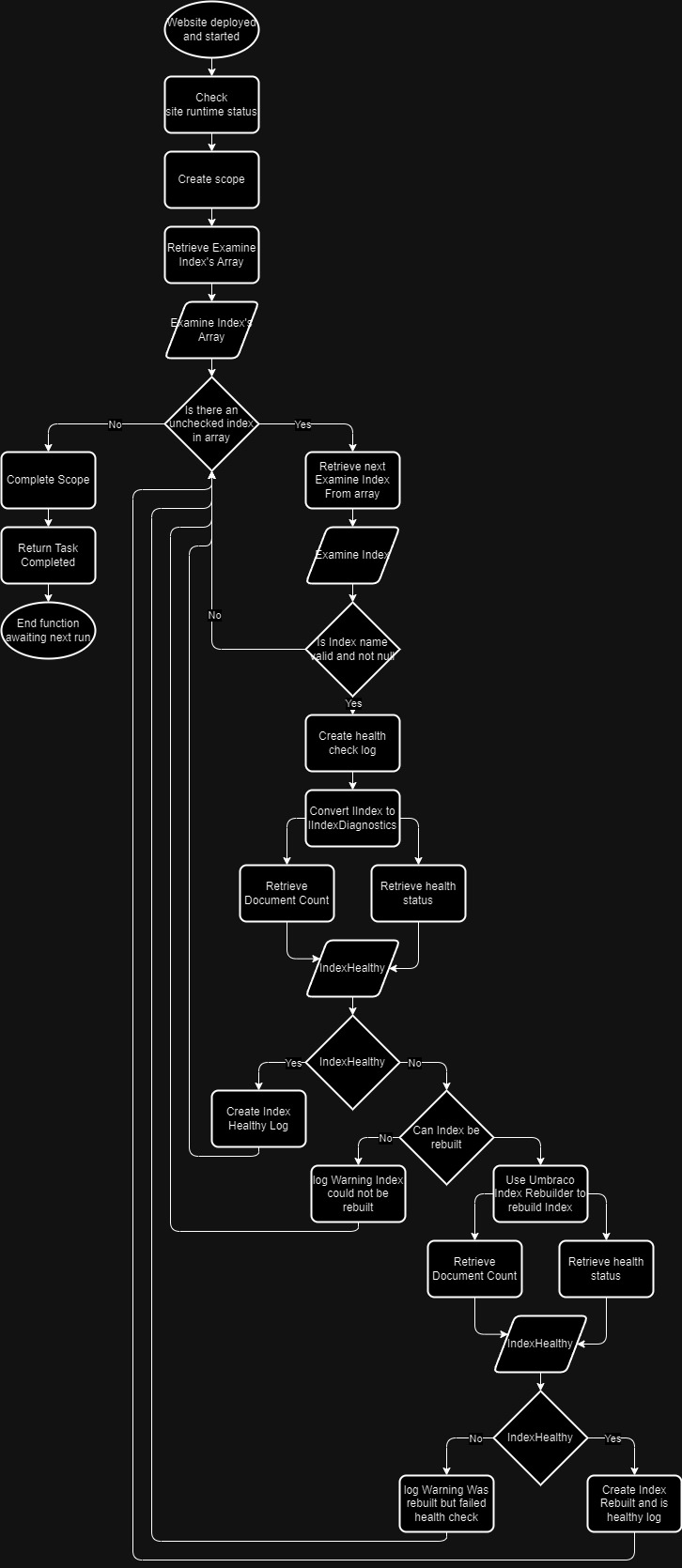

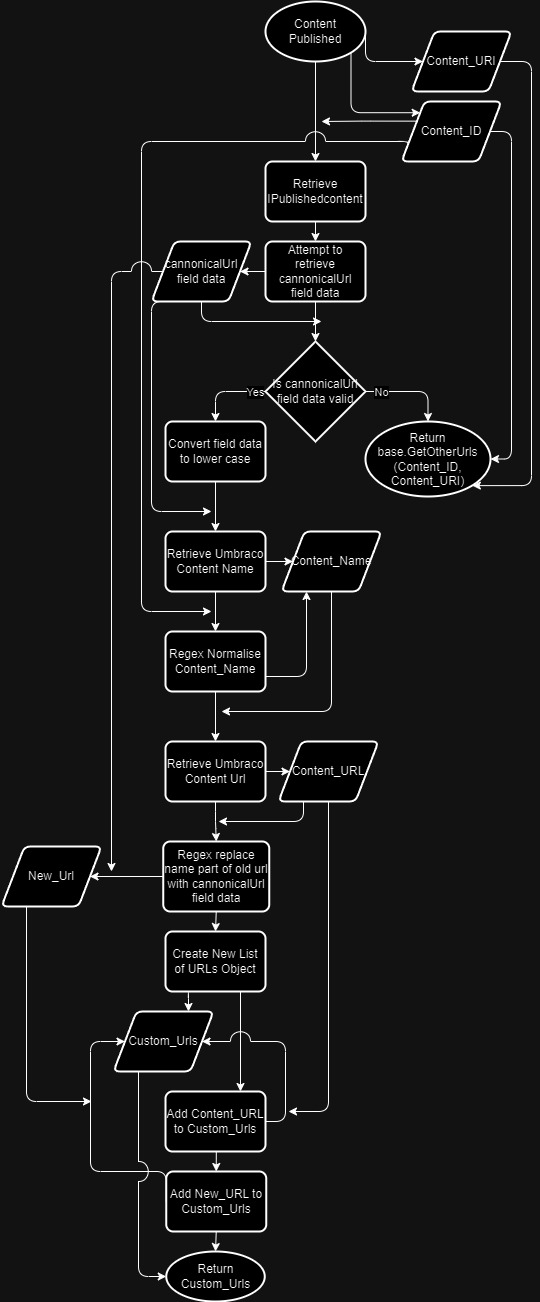

- Umbraco 13-14

- HTML

- CSS

- SCSS

- JavaScript

- TypeScript

- Angular 14

- Node.js

- NUnit

- .NET Core

- ASP.NET

- MVC

- Umbraco 7-13

- Sitecore